Building AI with MongoDB: Giving Your Apps a Voice

In previous posts in this series, we covered how generative AI and MongoDB are being used to unlock value from data of any modality and to supercharge communications. Put those topics together, and we can start to harness what is arguably the most powerful communications medium of them all: voice.

Voice brings context, depth, and emotion in ways that text, images, and video alone simply cannot. Or as the ancient Chinese proverb tells us, “The tongue can paint what the eyes can’t see.”

The rise of voice technology has been a transformative journey that spans over a century, from the earliest days of radio and telephone communication to the cutting-edge realm of generative AI. It began with the invention of the telephone in the late 19th century, enabling voice conversations across distances. The evolution continued with the advent of radio broadcasting, allowing mass communication through spoken word and music. As technology advanced, mobile communications emerged, making voice calls accessible anytime, anywhere.

Today, generative AI, powered by sophisticated machine learning (ML) models, has taken voice technology to unprecedented levels. Generating human-like voices from text is one example. Detecting sentiment in voice communications and summarizing them is another. These advances are revolutionizing how we interact with technology and information in the age of intelligent software.

In this post, we feature three companies that are harnessing the power of voice with generative AI to build completely new classes of user experiences:

- XOLTAR uses voice along with vision to improve engagement and outcomes for patients through clinical treatment and recovery.

- Cognigy puts voice at the heart of its conversational AI platform, integrating with back-office CRM, ERP, and ticketing systems for some of the world’s largest manufacturing, travel, utility, and ecommerce companies.

- Artificial Nerds enables any company to enrich its customer service with voice bots and autonomous agents.

Let's learn more about the role voice plays in each of these very different applications.

Check out our AI resource page to learn more about building AI-powered apps with MongoDB.

GenAI companion for patient engagement and better clinical outcomes

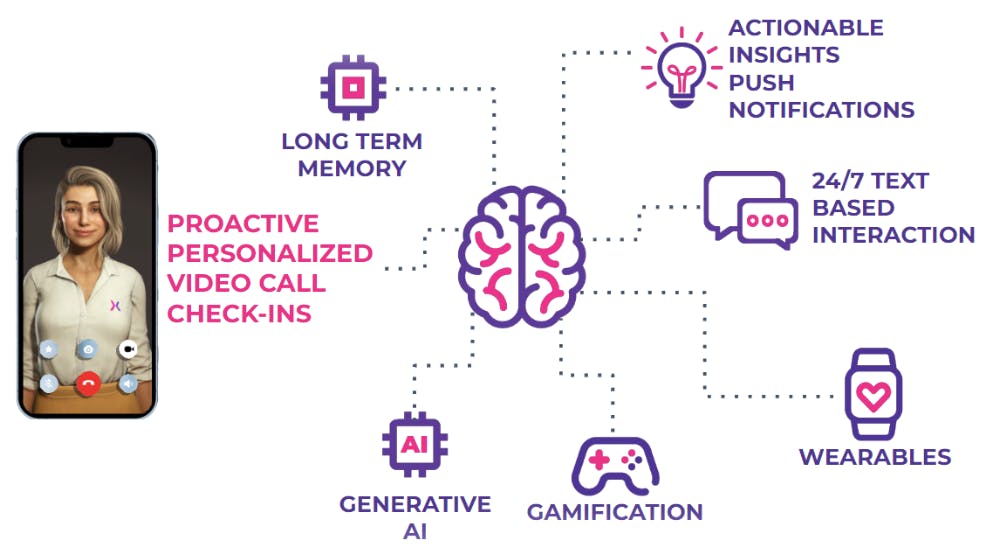

XOLTAR is the first conversational AI platform designed for long-lasting patient engagement. XOLTAR’s hyper-personalized digital therapeutic app is led by Heather, XOLTAR’s live AI agent. Heather is able to conduct omni-channel interactions, including live video chats. The platform is able to use its multimodal architecture to better understand patients, get more data, increase engagement, create long-lasting relationships, and ultimately achieve real behavioral changes.

About 50% of patients fail to stick to prescribed treatments. Through its app and platform, XOLTAR is working to change this, improving outcomes for both patients and practitioners. It supports patients' physical and emotional well-being throughout a course of treatment, encourages adherence to medication regimes, monitors post-treatment recovery, and collects patient data from wearables for remote analysis and timely interventions.

Powering XOLTAR is a sophisticated array of state-of-the-art machine learning models working across multiple modalities — voice and text, as well as vision for visual perception of micro-expressions and non-verbal communication. Fine-tuned LLMs coupled with custom multilingual models for real-time automatic speech recognition and various transformers are trained and deployed to create a truthful, grounded, and aligned free-guided conversation.

XOLTAR’s models personalize each patient’s experience by retrieving data stored in MongoDB Atlas. Taking advantage of the flexible document model, XOLTAR developers store structured data, such as patient details and sensor measurements from wearables, alongside unstructured data, such as video transcripts. This data provides both long-term memory for each patient and input for ongoing model training and tuning.
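To make the document model concrete, here is a minimal Python sketch of a single document that holds structured wearable readings next to an unstructured transcript. The field names, the helper `build_patient_document`, and the sample values are illustrative assumptions, not XOLTAR's actual schema.

```python
from datetime import datetime, timezone


def build_patient_document(patient_id, name, heart_rates, transcript):
    """Combine structured and unstructured patient data in one document.

    All field names here are hypothetical and chosen for illustration.
    """
    return {
        "_id": patient_id,
        "name": name,
        # Structured data: numeric sensor readings from a wearable.
        "wearable_readings": [
            {"metric": "heart_rate_bpm", "value": value} for value in heart_rates
        ],
        # Unstructured data: free text transcribed from a video session.
        "session_transcripts": [
            {"recorded_at": datetime.now(timezone.utc), "text": transcript}
        ],
    }


doc = build_patient_document(
    "p-001",
    "Example Patient",
    [72, 75],
    "I felt better after the morning dose.",
)

# With a running cluster and the pymongo driver, the document could be stored with:
#   collection.insert_one(doc)
```

Because both kinds of data live in one document, a single read can return everything an application needs to know about a patient.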

MongoDB also powers XOLTAR’s event-driven data pipelines. Follow-on actions generated from patient interactions are persisted in MongoDB, with Atlas Triggers notifying downstream consuming applications so they can react in real time to new treatment recommendations and regimes.
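Atlas Triggers run server-side functions in response to database changes. As a rough Python analogue, the sketch below shows how a consumer might map a change event to a follow-on action. It uses the change stream event shape (`operationType`, `fullDocument`) rather than a Trigger function, and the `patient_id` and `recommendation` fields are hypothetical.

```python
def follow_on_action(change_event):
    """Turn an insert change event into a message for a downstream consumer.

    Returns None for events that are not inserts. The document fields
    read here (patient_id, recommendation) are assumed for illustration.
    """
    if change_event.get("operationType") != "insert":
        return None
    document = change_event.get("fullDocument", {})
    return {
        "patient_id": document.get("patient_id"),
        "action": document.get("recommendation"),
    }


sample_event = {
    "operationType": "insert",
    "fullDocument": {
        "patient_id": "p-001",
        "recommendation": "schedule follow-up call",
    },
}
message = follow_on_action(sample_event)

# With pymongo against a replica set or Atlas cluster, live events could be read with:
#   for event in collection.watch():
#       follow_on_action(event)
```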

Through its participation in the MongoDB AI Innovators program, XOLTAR’s development team receives access to free Atlas credits and expert technical support, helping them de-risk new feature development.

How Cognigy built a leading conversational AI solution

Cognigy delivers AI solutions that empower businesses to provide exceptional customer service that is instant, personalized, in any language, and on any channel. Its main product, Cognigy.AI, allows companies to create AI Agents, improving experiences through smart automation and natural language processing. This powerful solution is at the core of Cognigy's offerings, making it easy for businesses to develop and deploy intelligent voice bots and chatbots.

Developing a conversational AI system poses challenges for any company. These solutions must effectively interact with diverse systems like CRMs, ERPs, and ticketing systems. This is where Cognigy introduces the concept of a centralized platform. This platform allows you to construct and deploy agents through an intuitive low-code user interface.

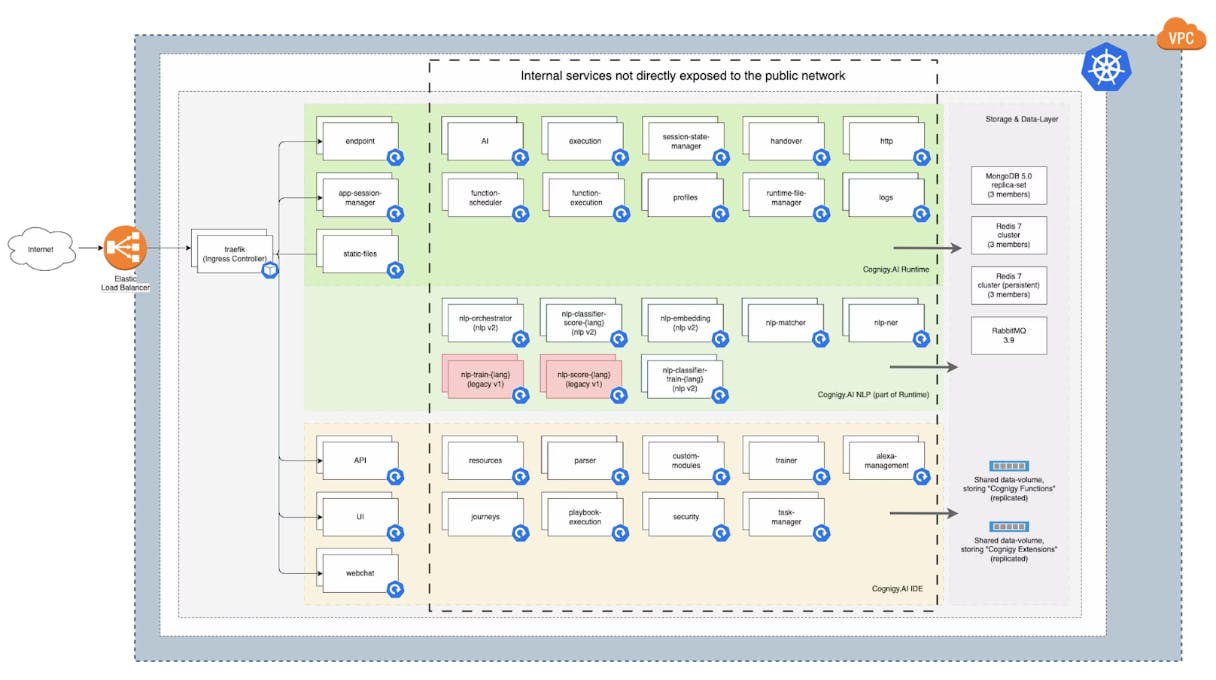

Cognigy took a deliberate approach when constructing the platform, employing a composable architecture model, as depicted in Figure 1. It designed over 30 specialized microservices, orchestrated through Kubernetes. These microservices are backed by MongoDB replica sets spanning three availability zones. In addition, indexing and caching strategies were integrated to improve query performance and shorten response times.

MongoDB has been a driving force behind Cognigy's unprecedented flexibility and scalability and has been instrumental in bringing groundbreaking products like Cognigy.AI to life.

Check out the Cognigy case study to learn more about their architecture and how they use MongoDB.

The power of custom voice bots without the complexity of fine-tuning

Founded in 2017, Artificial Nerds assembled a group of creative, passionate, and "nerdy" technologists focused on unlocking the benefits of AI for all businesses. Its aim was to liberate teams from repetitive work, freeing them up to spend more time building closer relationships with their clients.

The result is a suite of AI-powered products that improve customer sales and service. These include multimodal bots for conversational AI via voice and chat along with intelligent hand-offs to human operators for live chat. These are all backed by no-code functions to integrate customer service actions with backend business processes and campaigns.

Originally the company’s ML engineers fine-tuned GPT and BERT language models to customize its products for each one of its clients. This was a time-consuming and complex process. The maturation of vector search and tooling to enable Retrieval-Augmented Generation (RAG) has radically simplified the workflow, allowing Artificial Nerds to grow its business faster.
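The core idea of RAG is that client-specific knowledge is retrieved at query time and placed in the prompt, rather than being baked into the model through fine-tuning. The sketch below shows only the prompt-assembly step. The function name, the prompt wording, and the sample passages are assumptions for illustration, and no particular LLM API is implied.

```python
def build_rag_prompt(question, passages, max_passages=3):
    """Assemble a prompt from a question and retrieved passages.

    Only the first max_passages passages are used, on the assumption that
    the retriever has already ordered them by relevance.
    """
    context = "\n".join(f"- {passage}" for passage in passages[:max_passages])
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


retrieved = [
    "Refunds are processed within five business days.",
    "Support is available on weekdays.",
]
prompt = build_rag_prompt("How long does a refund take?", retrieved)
```

Supporting a new client then means loading that client's content into the retrieval store rather than training another model.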

Artificial Nerds started using MongoDB in 2019, taking advantage of its flexible schema to provide long-term memory and storage for richly structured conversation history, messages, and user data. When dealing with customers, it was important for users to be able to quickly browse and search this history. Adopting Atlas Search helped the company meet this need. With Atlas Search, developers were able to spin up a powerful full-text index right on top of their database collections to provide relevance-based search across their entire corpus of data. The integrated approach offered by MongoDB Atlas avoided bolting on a separate search engine and building an ETL mechanism to keep it in sync with the database, which removed the cognitive overhead of developing against, and operating, separate systems.
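Atlas Search queries are expressed as a `$search` stage in a regular aggregation pipeline. The sketch below builds such a pipeline in Python. The index name `default`, the field path `messages.text`, and the helper name are assumptions, not details of Artificial Nerds' deployment.

```python
def build_history_search_pipeline(query, limit=5):
    """Build an aggregation pipeline for full-text search over conversations.

    The index name and the field path are hypothetical.
    """
    return [
        {
            "$search": {
                "index": "default",
                "text": {"query": query, "path": "messages.text"},
            }
        },
        {"$limit": limit},
        # Return the relevance score next to each matching document.
        {"$project": {"messages": 1, "score": {"$meta": "searchScore"}}},
    ]


pipeline = build_history_search_pipeline("refund", limit=3)

# With pymongo and an Atlas cluster that has a search index defined:
#   results = list(collection.aggregate(pipeline))
```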

The release of Atlas Vector Search unlocks those same benefits for vector embeddings. The company has replaced its standalone vector database with the integrated MongoDB Atlas solution. Not only has this improved the productivity of its developers, but it has also improved the customer experience by reducing latency by a factor of four.
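Vector queries follow the same pattern, using a `$vectorSearch` aggregation stage. This sketch assumes a vector index named `vector_index` over an `embedding` field and uses a toy three-dimensional query vector. In practice the vector would come from an embedding model and must match the dimensions of the index.

```python
def build_vector_search_pipeline(query_vector, limit=5):
    """Build an aggregation pipeline for approximate nearest-neighbor search.

    The index name, the field path, and the 10x candidate multiplier are
    illustrative assumptions.
    """
    return [
        {
            "$vectorSearch": {
                "index": "vector_index",
                "path": "embedding",
                "queryVector": list(query_vector),
                # Consider more candidates than are returned to improve recall.
                "numCandidates": limit * 10,
                "limit": limit,
            }
        },
        {"$project": {"text": 1, "score": {"$meta": "vectorSearchScore"}}},
    ]


vector_pipeline = build_vector_search_pipeline([0.1, 0.2, 0.3], limit=2)

# With pymongo and an Atlas cluster that has a vector search index defined:
#   results = list(collection.aggregate(vector_pipeline))
```

Because the query runs inside the database that already holds the conversation data, there is no second system to keep in sync.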

Artificial Nerds is growing fast, with revenues expanding 8% every month. The company continues to push the boundaries of customer service by experimenting with new models including the Llama 2 LLM and multilingual sentence transformers hosted on Hugging Face. Being part of the MongoDB AI Innovators program helps Artificial Nerds stay abreast of all of the latest MongoDB product enhancements and provides the company with free Atlas credits to build new features.

Getting started

Check out our MongoDB for AI page to get access to all of the latest resources to help you build.

We see developers increasingly adopting state-of-the-art multimodal models and MongoDB Atlas Vector Search to work with data formats that have previously been accessible only to those organizations with access to the very deepest data science resources. Check out some examples from our previous Building AI with MongoDB blog post series here:

- Building AI with MongoDB: first qualifiers includes AI at the network edge for computer vision and augmented reality, risk modeling for public safety, and predictive maintenance paired with Question-Answering generation for maritime operators.

- Building AI with MongoDB: compliance to copilots features AI in healthcare along with intelligent assistants that help product managers specify better products and sales teams compose emails that convert 2x higher.

- Building AI with MongoDB: unlocking value from multimodal data showcases open source libraries that transform unstructured data into a usable JSON format, entity extraction for contracts management, and making sense of “dark data” to build customer service apps.

- Building AI with MongoDB: Cultivating Trust with Data covers three key customer use cases of improving model explainability, securing generative AI outputs, and transforming cyber intelligence with the power of MongoDB.

- Building AI with MongoDB: Supercharging Three Communication Paradigms features developer tools that bring AI to existing enterprise data, conversational AI, and monetization of video streams and the metaverse.

There is no better time to release your own inner voice and get building!